The Productive Brain

The human brain is a powerful resource. It is the source of our creativity. We use it to learn new things and record our life experience. Thus, it is the cradle of our skills. We have all had the fundamental human experience of growing our skills through learning and practice. We have also had the experience of atrophy when we have declined to put in that effort.

AI marketing seems to consistently tout productivity gains, yet companies repeatedly focus on how developers feel, rather than how productive they really are. In reality, we allow our own creativity to atrophy the moment we let the AI do a task for us. Who (or what) is putting in that effort?

How do we know what impact AI really has on us? Recent objective studies have been conducted to measure just that! It is worth noting that these studies are under peer review at the time of writing this blog article. However, at Amplify, we feel this is important enough to discuss right now.

What can we learn from these studies?

Software Development Productivity

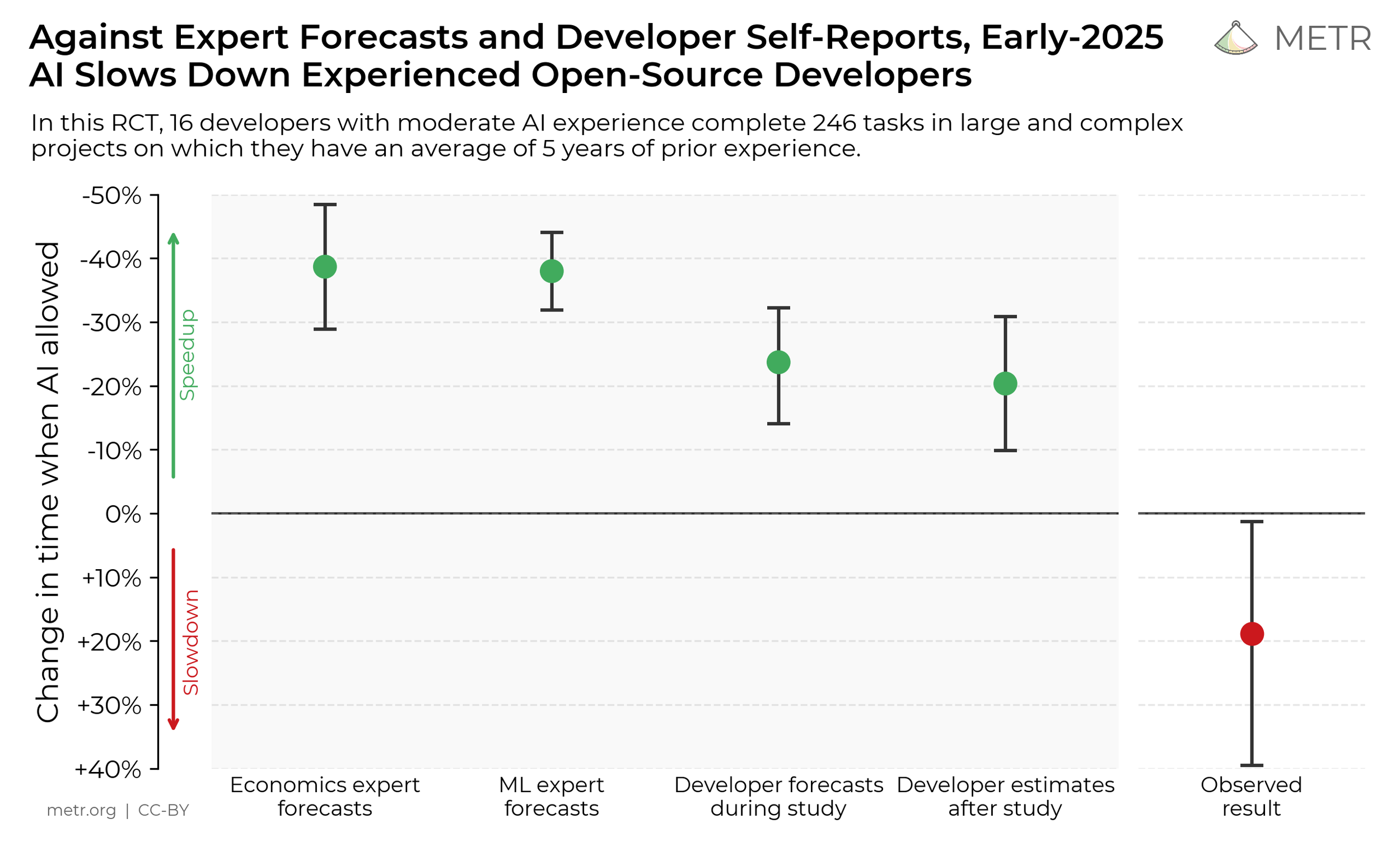

Joel Becker, Nate Rush, Elizabeth Barnes, and David Rein at METR conducted a randomized controlled trial measuring software developer productivity. In this trial, they employed 16 experienced open source developers to work on their own repositories using real issues that provided real world value to the repositories. These issues were randomly assigned as AI-enabled or AI-free and given to the developers to work on while recording their screens.

Before the exercise began, experts in the field and the participants all estimated that AI would improve productivity. Once finished, the participants reflected on their experience and gave another estimate on the impact of AI during the study. Every estimate, before and after the experiment, predicted a productivity boost.

What do you think METR found?

“When developers are allowed to use AI tools, they take 19% longer to complete issues—a significant slowdown that goes against developer beliefs and expert forecasts. This gap between perception and reality is striking: developers expected AI to speed them up by 24%, and even after experiencing the slowdown, they still believed AI had sped them up by 20%.”

These results are easier to see in their own chart on their website:

The contrast between how we feel about productivity with AI vs empirical results showing a reduction of productivity with AI.

Interesting! We have objective data to highlight a hole in the AI marketing hype! Subjective self-reports are hiding the objective truth. No wonder these companies don’t want to measure productivity objectively.

Neural Connectivity in Essay Writing

Nataliya Kosmyna at MIT conducted a study with 54 students who were given tasks of essay writing on high-level concepts. They all wore EEG caps to measure how brain activity differed between the brain-only group (control) and the two tech-supported groups: one with a search engine, another with ChatGPT.

Kosmyna made a critical observation which is directly applicable to all of us humans:

“Brain connectivity systematically scaled down with the amount of external support: the Brain‑only group exhibited the strongest, widest‑ranging networks, Search Engine group showed intermediate engagement, and LLM assistance elicited the weakest overall coupling.”

How does AI affect us?

“The use of LLM had a measurable impact on our participants, and while the benefits were initially apparent, as we demonstrated over the course of 4 sessions, which took place over 4 months, the LLM group’s participants performed worse than their counterparts in the Brain-only group at all levels: neural, linguistic, scoring.”

It would behoove us to pay special attention to that last finding. AI presents an immediate benefit coupled with long-term degradation. Effort stimulates the brain. Avoiding effort makes us dumber.

What Do You Want For Your Brain?

The results of these studies are verifiable in our own lives! Those fundamental human experiences of growth and atrophy are seen in these studies. If your brain is important to you like ours is to us, we need only to adopt one mindset to change our lives.

In Mindset, Dr Carol Dweck identified two mindsets: the fixed mindset and the growth mindset. A fixed mindset individual generally shies away from challenge and effort while feeling a potential threat against identity. Thus, they find their growth slowing down to a crawl. On the other hand, a growth mindset individual takes on ever-harder challenges while feeling a thrill to test one’s own limits. Thus, they experience the joy of continual growth!

We have all seen the deleterious effects of curation AI in social media: doom scrolling, increased anxiety, reduced attention spans, worsening mental health, and many more issues. How will generative AI affect human brains after 5 years of consistent use? How about after 10 years? What happens to the brains of our children in a world where generative AI is normal?

At Amplify, we choose to use our brains. Even if AI eventually overtakes humans in every real world use case, we will still be human. We will still need practice to grow our skills. Without practice, skills atrophy. With practice, skills grow. We believe the only sustainable path to ongoing creativity is to practice what we learn.

Humans created hardware. Then we created software. No AI was present when that happened, meaning software is a true FUBU idea: For Us, By Us. We author software as developers. We use software as customers. We are the purpose.

It’s a Trap

The AI marketing train has been going full steam for a while now and many have been caught up in the hype. Some have lost hope in humanity, lost faith in the future, and sunk towards depression with thoughts of a purposeless life in a world without a job to be had. These experiences are deeply personal; they are most uncomfortable.

The marketing train goes something like this: “AI is here! Look at these benchmarks! It’s already smarter than us! It is the last human invention and will automate all jobs, but you’ll lose your job before then to those who use AI… unless, of course, you use our AI first!” What a wildly productive message! The marketing hype uses some modicum of truth (we have some version of AI), with false equivalency (as if benchmarks resembled real life), and quickly launches the imagination into a possible future anchored in a claim about intelligence in the form of prophecy about jobs.

That message creates the emotional context of fear. Fear of loss. Fear is incredibly motivating. Daniel Kahneman and Amos Tversky's work on loss aversion suggests that the motivation to avoid a loss is roughly twice as strong as the motivation to gain the same thing. Fear even sells better than sex.

Beware the Corporate Agenda

Let’s take a look at GitHub and its Copilot product in the early stages:

GitHub took on an enormous investment to develop Copilot using unproven generative AI tech;

Copilot launched as an unpaid technical preview in 2021, generating zero revenue;

They conducted a study and published an article in 2022, spending even more time, effort, and money; and

They wrote a follow up blog post to show just how “good” their tool really was.

That was a lot of money tied up in unrealized inventory. How badly would you want a gigantic investment to pay off? What do you think GitHub would have done to make that happen?

Skewing the Subjective

GitHub shared that productivity is “difficult to measure”, productivity to a developer is like “having a good day”, and “satisfied developers perform better”. They painted a picture where objective measurement was too hard, thereby elevating the importance of the subjective. They alluded to the objective by using the SPACE framework to measure productivity, but with a stated focus on Satisfaction and well-being and Efficiency and Flow, both of which are subjective. They provide charts with stats and numbers, which imply objectivity; however, they are merely aggregations of more subjective self-reports. They even admit what they did in their paper:

“We will thus focus on studying perceived productivity”

That emphasis is theirs. They didn’t study productivity; they studied perceived productivity. GitHub found a group of early adopters who were predisposed to a positive emotional state, crafted a study which leaned on cognitive bias instead of measuring objective value, and ran a marketing campaign with a crafted “study” to capitalize on a gigantic investment. We all have biases; they took advantage of them. We simply feel more productive because it takes less effort to offload our skilled work, but how do we know if we are actually more productive?

Their chart in their first section of the blog post reveals much. Research conducted to uncover the truth uses neutral phrasing to avoid bias, like “how did this impact your productivity?” Yet their questions were written to psychologically prime the reader for a positive answer. They are still using the same tricks today. You can see it in their survey engine in questions like, “How much less time did the coding take during this PR with Copilot?” (Emphasis mine.)

So much effort was spent measuring the subjective and advertising it as objective. Yet despite all of their claims about the difficulties in conducting objective measurements with disagreed-upon metrics of what constitutes productivity, they proved they knew exactly how to do it. It’s how the industry has been doing it for ages.

Skewing the Objective

The blog post has a single objective measurement, and it did not appear in the "perceived productivity" study. They measured that it took developers 55% less time to complete the task with Copilot! What an amazing find! Dollars were sure to roll in! The chart in that section is flashy and draws attention. It’s easy for us to gloss over the setup of the study:

“…write an HTTP server in JavaScript.”

What are the most difficult things for an AI to accomplish? Everything that was stripped out of that test:

Understanding the existing code;

Correctly rationalizing complexities of the business rules and its domain; and

Knitting new changes together with the old code and logic in a manner which increases quality.

None of those difficulties were in their highly synthetic and ridiculously simple test case.

What have we seen AI generally succeed at? Greenfield development of a tightly-defined and well-known problem which is repeatedly documented in training data. That one test was specifically designed to showcase Copilot in the absolute best light possible, which they had already discovered through use of Copilot in the technical preview.

It is all a marketing ploy.

GitHub is not alone in this. In general, companies pushing AI avoid legitimate objectivity, mask the subjective as objective, and use existential fear to drive sales. How do we feel about that?

Where Do We Go From Here?

We encourage every developer to capitalise on the absolute best tool they have: the human brain. Your brain has been engineered to learn, which enables you to increase your rate of progress and accuracy over time. It is the practice of our skills which stimulates growth. How much practice of real software development do we get when we offload our skills to an AI?

The first step is to do the work ourselves to stimulate our own growth. The next is to find tools which boost the right kinds of measures. We even have a tool for you: Easely. Easely solves common issues with modern software development.

It is designed for your brain; the churn of cognitive load is constrained to the tool, allowing you to allocate those extra mental resources to the task at hand;

It transforms the process of development into a system of learning to uncover the next right step as quickly as possible; and

It streamlines the process of pulling a solution from our mind.

One step at a time, you’ll write the only code that is needed.

The Need for Speed

Leadership, management, and engineering all recognise the need for speed: the need of a business to make money. Leadership feels this urgency and is incentivised to meet the customer’s needs through sales, thus keeping the business alive. Management is incentivised to drive product development to go ever faster to beat the competition. Engineering tries to solve the problem their way: produce more code more quickly! Yet many of us are left with the frustrating experience of watching our productivity trend ever downwards. Whether or not we have reports and metrics, we often feel that there’s something wrong. We feel like we should be going faster. How can this be?

Tech companies advertise the use of Agile in order to gain speed, yet engineers observe that work is piling up faster than we can deliver. The result: an ever-growing backlog we can see. We notice the problem and try various options to speed up, the latest of which are copilot tools and vibe coding. However, that doesn’t have the desired effect as AIs produce more code with more errors. We live in a world with tools to create an infinite amount of code, but how valuable is quantity with unknown quality? In fact, how valuable is any quantity?

This issue becomes obvious when we observe the whole system through the lenses of Lean Manufacturing and the Theory of Constraints. Lean identifies waste as anything which does not provide value to the customer, be that a bug or undesired feature. Therefore, productivity is measured by doing the right things, not lots of things. The Theory of Constraints tells us that the tightest bottleneck decides the throughput of a system. Therefore, we need to address the critical constraint in the pipeline. That’s usually in engineering.
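To make the bottleneck idea concrete, here is a minimal Python sketch. The stage names and weekly capacities are invented for illustration and are not measurements from any real team. The only claim it encodes is the one above: a pipeline delivers no faster than its tightest stage.

```python
from dataclasses import dataclass, replace
from typing import List


@dataclass(frozen=True)
class Stage:
    name: str
    capacity_per_week: int  # units of work this stage can finish per week (made-up numbers)


def bottleneck(stages: List[Stage]) -> Stage:
    """The stage with the lowest capacity constrains the whole pipeline."""
    return min(stages, key=lambda stage: stage.capacity_per_week)


def throughput(stages: List[Stage]) -> int:
    """Work flows through every stage, so output is capped by the bottleneck."""
    return bottleneck(stages).capacity_per_week


def with_capacity(stages: List[Stage], name: str, capacity: int) -> List[Stage]:
    """Return a copy of the pipeline with one stage's capacity changed."""
    return [
        replace(stage, capacity_per_week=capacity) if stage.name == name else stage
        for stage in stages
    ]


# Hypothetical pipeline: every number here is an assumption for the example.
pipeline = [
    Stage("discovery", 12),
    Stage("engineering", 5),
    Stage("review", 9),
    Stage("release", 20),
]

print(bottleneck(pipeline).name, throughput(pipeline))

# Doubling a stage that is not the constraint changes nothing.
print(throughput(with_capacity(pipeline, "discovery", 24)))

# Doubling the constraint helps, but only until the next bottleneck takes over.
improved = with_capacity(pipeline, "engineering", 10)
print(bottleneck(improved).name, throughput(improved))
```

In this toy pipeline, speeding up discovery leaves throughput unchanged, while relieving engineering moves the constraint to review. That is why producing more code faster only helps if writing code was the constraint in the first place.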

The typical system of development yields enormous waste and exorbitant costs as we repeatedly spend time, money, and effort on things we don’t even know we need. We ship complex features, with lots of code, and provide ongoing support, thereby raising the effective cost of the business. Every time we release something unverified as needed, we add burden to the entire organisation in the hope that we find success.

Ideally, we want to pull what is needed from our limited pool of precious resources. We need some way to discover what we actually need to do. How do we know which units of work actually drive success?

How Do We Know?

This one question cuts to the quick, separating fact from feeling. We are biased creatures who use feelings to guide our decisions on a regular basis: we “trust our gut”. As common as this phrase is in conversation, it’s short-sighted. Trusting our gut can be valuable, but its value pales in comparison to data. We can uncover this data and drive our learning. At Amplify, we have found a process to uncover the truth which directs our next steps. This process enables us to answer the question, “how do we know we are building the right thing?”

Validated Learning

Eric Ries identifies a critical unit of measure in The Lean Startup which determines business success: validated learning. The more golden nuggets of learning we have, the brighter the light on the path ahead. Validated learning is directly applicable from leadership’s zoomed-out perspective on strategy all the way to the zoomed-in process of our SDLC. We uncover validated learning in engineering by iteratively running small experiments on our code. We then discover the minimum set of required changes to meet the needs of what came immediately before, and experiment again.

The faster we learn, the faster we meet these needs.

Enter Easely, Stage Right

The Mikado Method is a process of experimentation and discovery (iterative learning), to select what is required (filter out waste, reduce operating expense), and systematically address the correctness of the feature (productive, valuable) with less code (reduce inventory), allowing us to ship faster (increase throughput). This process effectively trims out over-engineering at the start, thereby eliminating whole sets of engineering-led premature optimization (we don’t even know we are doing), which shrinks the size of each feature. You will discover how simple your solutions can be.
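The loop described above can be sketched in a few lines of Python. This is a simplified illustration of the general Mikado idea, not Easely's implementation. The task names and the `DISCOVERED` table are hypothetical, and `try_change` is a stand-in for what a developer really does: attempt the change, watch the build and tests, and note what must be fixed first.

```python
from typing import Callable, Dict, List

# Hypothetical prerequisites a developer might discover by experimenting.
# In real work nobody knows this table up front; it is learned one attempt at a time.
DISCOVERED: Dict[str, List[str]] = {
    "replace payment client": ["extract payment interface", "add contract tests"],
    "extract payment interface": ["remove global config access"],
    "add contract tests": [],
    "remove global config access": [],
}


def try_change(task: str, done: List[str]) -> List[str]:
    """Simulated experiment: return the prerequisites that still block this task."""
    return [p for p in DISCOVERED.get(task, []) if p not in done]


def mikado(goal: str, experiment: Callable[[str, List[str]], List[str]]) -> List[str]:
    """Return the order in which changes can be committed, leaves first."""
    done: List[str] = []
    in_progress = set()

    def attempt(task: str) -> None:
        if task in done:
            return
        if task in in_progress:
            raise ValueError(f"circular prerequisite at {task!r}")
        in_progress.add(task)
        while True:
            blockers = experiment(task, done)  # try the change naively
            if not blockers:
                break  # nothing breaks: the change is safe to keep
            # Something broke: revert, then deal with each blocker first.
            for blocker in blockers:
                attempt(blocker)
        in_progress.discard(task)
        done.append(task)

    attempt(goal)
    return done


order = mikado("replace payment client", try_change)
for step, task in enumerate(order, start=1):
    print(step, task)
```

The goal is committed last, and only the changes that an experiment proved necessary appear in the list. Each pass through the `while` loop is one round of validated learning.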

The Mikado Method is the core process inside Easely. We have taken the Mikado Method, supercharged it, wrapped it in a plugin, and embedded it into the IDE. The power is at your fingertips.

The speed of development with this process is directly related to the speed of discovering validated learning. This means the fastest way to increase productivity is to go through the loop of the Mikado Method as fast as possible. At Amplify, we know this to be true. Our primary goal with Easely is to shorten the loop as much as possible, enabling you to learn as fast as possible.

You can effect the right change, Easely.